![[New] Superior Imaging Why HDR Sets the Standard Over SDR](https://thmb.techidaily.com/5996397f505d52b0f60ffe77c36fd8859621590a57dd0707f44eeaa06c560dbc.jpg)

Superior Imaging: Why HDR Sets the Standard Over SDR

In the early days of photo and video displays, there were few choices to make beyond the color of the set. Today, screen size and contrast ratio are common considerations.

If you are seriously considering an upgrade to HDR, read on to learn how it differs from SDR.

What are High Dynamic Range (HDR) and Standard Dynamic Range (SDR)?

Let us discuss what SDR and HDR are in general before delving into what makes them different.

SDR stands for Standard Dynamic Range and is still the baseline standard for cameras and video displays. It encodes videos and images with a conventional gamma curve, which was designed around the limits of cathode ray tube (CRT) displays and assumes a peak luminance of about 100 cd/m² (nits).
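As a rough illustration of the gamma curve described above, the sketch below maps a normalized SDR code value to screen luminance. It assumes the simplified ITU-R BT.1886 form with a perfect black level (luminance = peak × value^2.4) and a 100-nit reference peak; real displays add a black-level term and are often brighter than 100 nits.

```python
"""Sketch: SDR gamma curve, simplified BT.1886 with a zero black level (assumption)."""

SDR_PEAK_NITS = 100.0  # reference peak luminance inherited from CRT-era limits
GAMMA = 2.4            # BT.1886 exponent


def sdr_eotf(code_value: float, peak_nits: float = SDR_PEAK_NITS) -> float:
    """Convert a normalized code value (0.0 to 1.0) to luminance in nits."""
    v = min(max(code_value, 0.0), 1.0)  # clamp to the legal range
    return peak_nits * v ** GAMMA


def sdr_inverse_eotf(nits: float, peak_nits: float = SDR_PEAK_NITS) -> float:
    """Convert luminance in nits back to a normalized code value."""
    ratio = min(max(nits / peak_nits, 0.0), 1.0)  # anything above the peak clips
    return ratio ** (1.0 / GAMMA)


if __name__ == "__main__":
    for v in (0.25, 0.5, 0.75, 1.0):
        print(f"code value {v:.2f} -> {sdr_eotf(v):6.2f} nits")
```

Under these assumptions, half the code range lands at only about 19 nits, so most code values are spent on darker tones, and anything brighter than the 100-nit peak simply clips.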

High Dynamic Range (HDR), on the other hand, is a more advanced standard that widens the range between the darkest and brightest parts of an image. While SDR is still the most common, HDR is now available on TVs, smartphones, monitors, and other digital devices for better visual clarity. When you capture images in HDR mode, you will notice better blending and contrast between the highlights and shadows in a scene.

SDR vs. HDR: Why is SDR fading in popularity?

Put simply, HDR is an enhanced version of SDR in terms of color clarity, tonal balance, and brightness. Before discussing their differences in detail and showing why HDR is a better successor to SDR, here is a summary in table form.

| HDR | SDR | |

|---|---|---|

| Main Enhancement Quality | HDR photography and TV effects guarantee better color, exposure, and detailed benefits. It offers a dynamic range effect in color vibrancy, cohesively enhancing the dark and light parts. | SDR does not offer a very high dynamic range and holds a limited color gamut and range. The underexposure contrast and brightness levels are slightly more muted. |

| Brightness | The brightness can adjust between 1 nit to 1000 nits on scenes. | The brightness level for SDR devices ranges between 100-300 bits. |

| Color Depth | HDR allows color depth support for 8-bit/10-bit/12-bit variety. | 10-bit SDR is available, but the most common standard is the 8-bit version. |

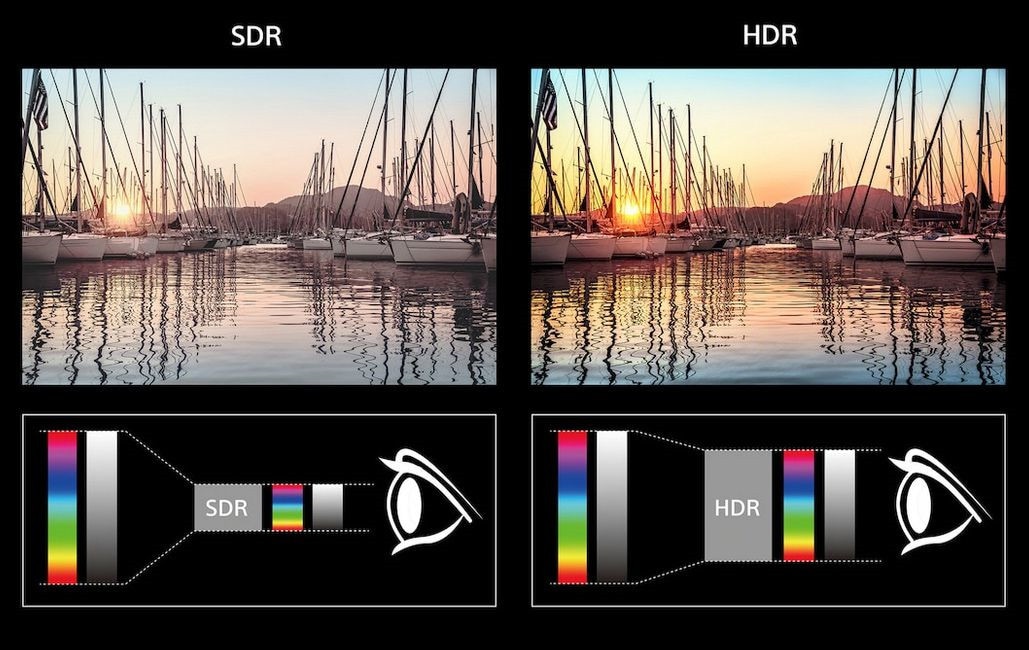

| Color Gamut | HDR supports a color gamut of Rec.2020 and adopts the P3 version mostly. | It works with the Rec.709 type of color gamut range. |

| Size Availability | It works with bigger-sized files and offers more exposure/color detailing for realistic quality images. | It does not require a huge storage size since it does not process very complex data. |

| Internet Speed Support | It requires very fast connectivity to work properly. | Works with slow-medium internet speed well. |
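The brightness row is where HDR encoding differs most from SDR. HDR10 content uses the SMPTE ST 2084 "PQ" (perceptual quantizer) curve, which maps code values to absolute luminance up to 10,000 nits instead of the roughly 100-nit reference of the SDR gamma curve. The sketch below implements the published PQ equations for illustration only; it is not production color-management code.

```python
"""Sketch: SMPTE ST 2084 (PQ) transfer function, as used by HDR10."""

# Constants defined in SMPTE ST 2084
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PQ_PEAK_NITS = 10000.0  # PQ encodes absolute luminance up to 10,000 nits


def pq_eotf(code_value: float) -> float:
    """Normalized PQ code value (0.0 to 1.0) -> luminance in nits."""
    e = min(max(code_value, 0.0), 1.0) ** (1.0 / M2)
    y = (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1.0 / M1)
    return PQ_PEAK_NITS * y


def pq_inverse_eotf(nits: float) -> float:
    """Luminance in nits -> normalized PQ code value."""
    y = min(max(nits / PQ_PEAK_NITS, 0.0), 1.0) ** M1
    return ((C1 + C2 * y) / (1.0 + C3 * y)) ** M2


if __name__ == "__main__":
    for nits in (1, 100, 1000, 10000):
        print(f"{nits:>6} nits -> PQ code value {pq_inverse_eotf(nits):.3f}")
```

Roughly half of the PQ code range (about 0.51) covers 0 to 100 nits, the entire SDR reference range, which leaves the upper half for highlights up to 10,000 nits.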

Beyond these notable points, let us look more closely at how HDR and SDR differ in color and contrast.

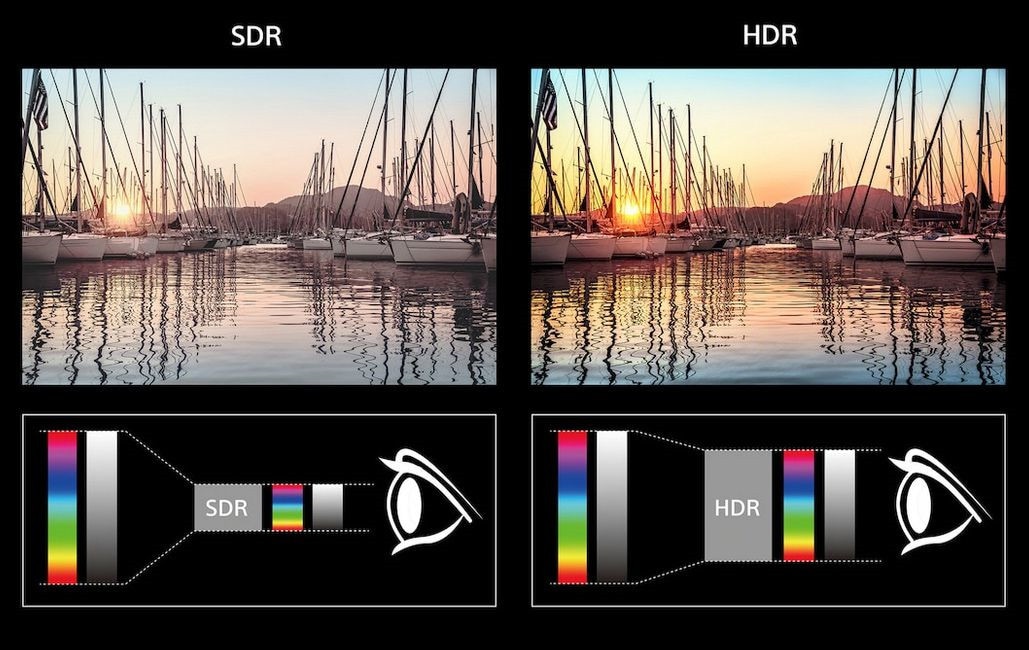

Color and Contrast

Televisions and monitors with wide color gamut support can display a broader range of colors. Compared with standard-gamut devices, they reproduce more saturated colors, which suits HDR content. When it comes to visual impact, HDR is the stronger contender.

- Color gamut

Most older SDR monitors, including many still in use, support only a limited color gamut. Note that ‘color gamut’ refers to the range of colors a display can reproduce, which determines how saturated its colors can get.

The difference in color gamut is most visible in reds and greens. However, gamut is not strictly tied to HDR: many low-end HDR TVs also have a narrow color gamut, so the color difference mostly shows up on HDR or SDR TVs with wide color gamut support.
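One way to see why gamut matters is to compare the area each standard's color triangle covers on the CIE 1931 xy chromaticity diagram. The sketch below uses the published red, green, and blue primaries for Rec.709, DCI-P3, and Rec.2020 and the shoelace formula for triangle area. Treat the result as a rough proxy only, because the xy diagram is not perceptually uniform.

```python
"""Sketch: compare gamut triangle areas on the CIE 1931 xy diagram."""

# (x, y) chromaticities of the red, green, and blue primaries
PRIMARIES = {
    "Rec.709": [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)],
    "DCI-P3": [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)],
    "Rec.2020": [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)],
}


def triangle_area(points):
    """Shoelace formula for the area of a triangle given three (x, y) points."""
    (x1, y1), (x2, y2), (x3, y3) = points
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2.0


if __name__ == "__main__":
    base = triangle_area(PRIMARIES["Rec.709"])
    for name, pts in PRIMARIES.items():
        area = triangle_area(pts)
        print(f"{name:9} area {area:.4f} ({area / base:.2f}x Rec.709)")
```

By this measure, DCI-P3 covers roughly 1.36 times the area of Rec.709, and Rec.2020 roughly 1.89 times.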

- Color depth

Similarly, color depth depends on the TV or monitor you use. An SDR TV that supports a wider palette of colors has a better chance of displaying vibrant objects, such as the smooth red gradients on an apple.

An 8-bit television supports 256 shades each of red, green, and blue, which gives about 16.7 million colors (256 × 256 × 256). A 10-bit TV supports 1,024 shades per channel, or about 1.07 billion colors. SDR monitors are typically 8-bit, so images have lower color depth, and gradients can look uneven, with visible bands that make images appear blocky.

HDR devices, by comparison, come in 8-bit, 10-bit, and 12-bit varieties, and the higher bit depths show smoother gradients and more vibrant color variations.
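The arithmetic above can be checked with a short sketch: each extra bit doubles the shades per channel, and the total color count is that number cubed. The step-width estimate is a simplification added for illustration; it spreads a full black-to-white ramp evenly across a 3840-pixel-wide screen and ignores the gamma curve, which spaces levels unevenly in brightness.

```python
"""Sketch: color counts and gradient banding at different bit depths."""


def shades_per_channel(bits: int) -> int:
    """Number of distinct levels one color channel can represent."""
    return 2 ** bits


def total_colors(bits: int) -> int:
    """Distinct RGB colors: every combination of red, green, and blue levels."""
    return shades_per_channel(bits) ** 3


def band_width_px(gradient_width_px: int, bits: int) -> float:
    """Average width of each step when one full ramp spans the given pixels
    (a simplified banding estimate that ignores gamma)."""
    return gradient_width_px / shades_per_channel(bits)


if __name__ == "__main__":
    for bits in (8, 10, 12):
        print(
            f"{bits}-bit: {shades_per_channel(bits):>5} shades per channel, "
            f"{total_colors(bits):,} colors, "
            f"~{band_width_px(3840, bits):.1f} px per step on a 3840 px ramp"
        )
```

At 8 bits each step of such a ramp is about 15 pixels wide, wide enough to show as a band, while at 12 bits the count rises to about 68.7 billion colors and steps shrink below one pixel.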

If you want more impactful image and video quality, HDR is the better choice.


Compatibility

It is important to check which devices and setups support each of these formats, especially for HDR and SDR gaming.

Check whether your graphics card supports HDR. HDR output generally requires an HDMI 2.0 or DisplayPort 1.3 port or newer (HDR metadata support was formally added in HDMI 2.0a and DisplayPort 1.4). If your GPU has one of these ports, it should be able to output HDR content. For example, Nvidia GeForce 900-series GPUs and later models come with HDMI 2.0 ports, and AMD graphics cards from 2016 onward also support these ports.
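Port versions matter partly because of bandwidth. The sketch below estimates the raw data rate for the active pixels of a video signal and compares it with the roughly 14.4 Gbit/s of video data that HDMI 2.0 can carry (its 18 Gbit/s link rate minus 8b/10b encoding overhead). It ignores blanking intervals and audio, so real requirements are somewhat higher; treat it as a back-of-the-envelope check, not a compatibility test.

```python
"""Sketch: uncompressed video data rate versus an assumed HDMI 2.0 capacity."""

HDMI_2_0_VIDEO_GBPS = 14.4  # 18 Gbit/s link minus 8b/10b encoding overhead


def data_rate_gbps(width: int, height: int, fps: int, bits_per_channel: int,
                   channels: int = 3) -> float:
    """Raw rate for active pixels only (full RGB / 4:4:4, no blanking or audio)."""
    bits_per_second = width * height * fps * bits_per_channel * channels
    return bits_per_second / 1e9


if __name__ == "__main__":
    for bits in (8, 10):
        rate = data_rate_gbps(3840, 2160, 60, bits)
        verdict = "fits" if rate <= HDMI_2_0_VIDEO_GBPS else "exceeds"
        print(f"4K60 {bits}-bit RGB: {rate:.2f} Gbit/s ({verdict} HDMI 2.0)")
```

Even before blanking overhead, 4K at 60 fps with 10-bit RGB color exceeds what HDMI 2.0 can carry, which is why such links commonly fall back to chroma subsampling (for example 4:2:2) for 10-bit HDR at that resolution and frame rate.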

Display compatibility also determines which monitors can show HDR content. While 1080p is common among SDR displays, it is a relatively uncommon resolution for HDR-compatible ones. For better results, choose a 4K monitor, ideally one that can display HDR10 content.

You should also understand how SDR and HDR differ in encoding formats. SDR content is mainly encoded in the Rec.709/sRGB gamut, while HDR uses Rec.2020 for wide color gamut (WCG).
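Because Rec.709 sits inside Rec.2020, SDR colors can be re-expressed in Rec.2020 without loss. The sketch below applies the 3×3 conversion matrix given in ITU-R BT.2087, rounded to four decimals, to linear-light RGB values; it assumes the input has already been decoded from its gamma curve, since the matrix is not valid for gamma-encoded values.

```python
"""Sketch: convert linear Rec.709 RGB to linear Rec.2020 RGB (ITU-R BT.2087)."""

# Rows produce R, G, B in Rec.2020; columns weight R, G, B in Rec.709.
REC709_TO_REC2020 = (
    (0.6274, 0.3293, 0.0433),
    (0.0691, 0.9195, 0.0114),
    (0.0164, 0.0880, 0.8956),
)


def rec709_to_rec2020(rgb):
    """Apply the matrix to one linear-light (r, g, b) triple."""
    return tuple(sum(m * c for m, c in zip(row, rgb)) for row in REC709_TO_REC2020)


if __name__ == "__main__":
    print("white:", rec709_to_rec2020((1.0, 1.0, 1.0)))  # each row sums to 1, so white stays white
    print("red:  ", rec709_to_rec2020((1.0, 0.0, 0.0)))  # Rec.709 red mixes in some green and blue
```

Pure Rec.709 red becomes roughly (0.63, 0.07, 0.02) in Rec.2020 terms, which shows that SDR's most saturated red is well short of the red a Rec.2020 signal can encode.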


Additionally, consider dynamic range in stops, the unit cameras use to measure it; each stop represents a doubling of light. Dynamic range affects the gamma curve and bit depth needed to encode an image. HDR offers much more dynamic range, handling about 17.6 stops, while most SDR displays manage about 6 stops.
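To put those stop counts in perspective, the sketch below converts between stops and contrast ratio using the definition that each stop doubles the light. The 6-stop and 17.6-stop figures come from the paragraph above; the function names are illustrative.

```python
"""Sketch: convert between stops of dynamic range and contrast ratio."""
import math


def stops_from_luminance(brightest: float, darkest: float) -> float:
    """Each stop doubles the light, so stops = log2(brightest / darkest)."""
    if brightest <= 0 or darkest <= 0:
        raise ValueError("luminance values must be positive")
    return math.log2(brightest / darkest)


def contrast_ratio_from_stops(stops: float) -> float:
    """Inverse: a range of n stops spans a 2**n : 1 contrast ratio."""
    return 2.0 ** stops


if __name__ == "__main__":
    for label, stops in (("SDR (about 6 stops)", 6.0), ("HDR (about 17.6 stops)", 17.6)):
        print(f"{label}: {contrast_ratio_from_stops(stops):,.0f}:1")
```

Six stops works out to a 64:1 ratio, while 17.6 stops is roughly 200,000:1, which shows how large the gap between the two figures really is.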

Is photo and camera phone HDR the same as TV HDR?

When evaluating HDR and SDR, it is important to consider whether you mean phone photography or television. The two kinds of HDR are not mutually exclusive, since a single device can support both.

Many people find it confusing to tell TV HDR and photo HDR apart. However, apart from the name, they are very different:

- Photo HDR: combines several pictures taken at different exposures into one image that looks as if it has a higher dynamic range.

- TV HDR: expands the TV’s color palette and contrast ratio so that the displayed image looks more natural and vibrant.
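The Photo HDR idea of combining exposures can be sketched in a few lines. The example below is a simplified radiance merge, assuming a linear camera response and pixel values normalized to 0.0 to 1.0: each exposure's pixel is divided by its exposure time to estimate scene brightness, and the estimates are averaged with a common triangle ("hat") weighting that distrusts near-black and clipped pixels. It is an illustration, not how any particular phone implements HDR mode.

```python
"""Sketch: merge bracketed exposures into one radiance estimate per pixel.

Assumes a linear camera response and pixel values normalized to 0.0-1.0.
"""


def hat_weight(z: float) -> float:
    """Trust mid-tones most; values near 0 (noisy) or 1 (clipped) get little weight."""
    return 1.0 - abs(2.0 * z - 1.0)


def merge_exposures(images, exposure_times):
    """images: equal-length lists of pixel values, one list per exposure."""
    shortest = exposure_times.index(min(exposure_times))
    merged = []
    for i in range(len(images[0])):
        weighted_sum = 0.0
        weight_total = 0.0
        for img, t in zip(images, exposure_times):
            z = img[i]
            w = hat_weight(z)
            weighted_sum += w * (z / t)  # z / t estimates scene radiance
            weight_total += w
        if weight_total > 0:
            merged.append(weighted_sum / weight_total)
        else:
            # Every exposure is fully black or clipped: fall back to the shortest one.
            merged.append(images[shortest][i] / exposure_times[shortest])
    return merged


if __name__ == "__main__":
    short_exp = [0.2, 0.5]  # exposure time 1
    long_exp = [0.8, 1.0]   # exposure time 4; the second pixel is clipped
    print(merge_exposures([short_exp, long_exp], [1.0, 4.0]))
```

The clipped pixel in the long exposure gets zero weight, so its merged value comes from the short exposure. Phone HDR modes typically go further, aligning frames, suppressing ghosting, and tone-mapping the result back into a displayable image.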

In short, TV HDR aims to display more realistic scenes by reproducing color, brightness, and contrast better than most standard televisions. When you take a picture with an HDR-capable camera, such as an iPhone's, you can turn on HDR mode to get better color balance, reduce ghosting, and reduce blurry sections.

So Photo HDR changes the captured image itself, while TV HDR only improves how content is displayed.

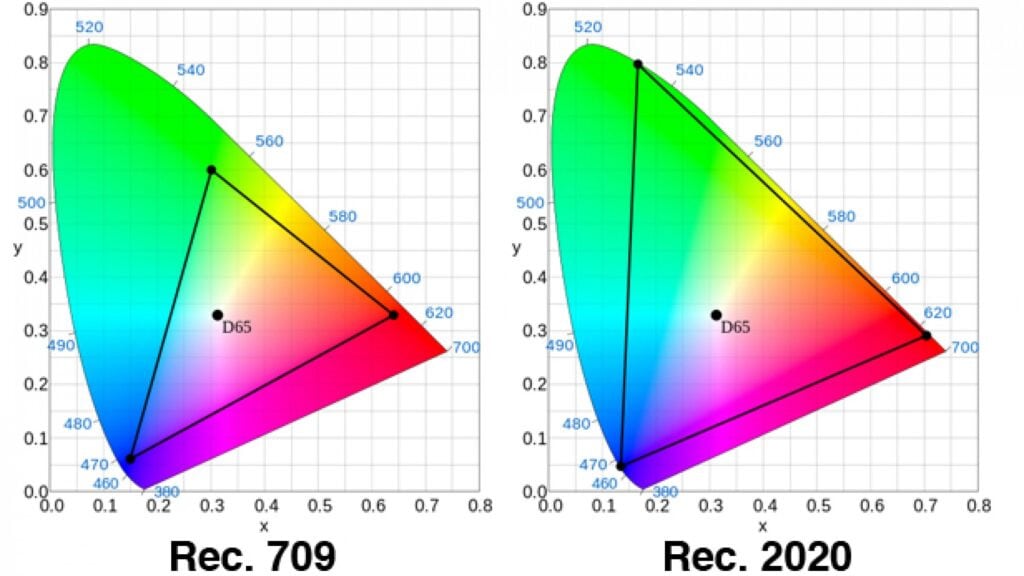

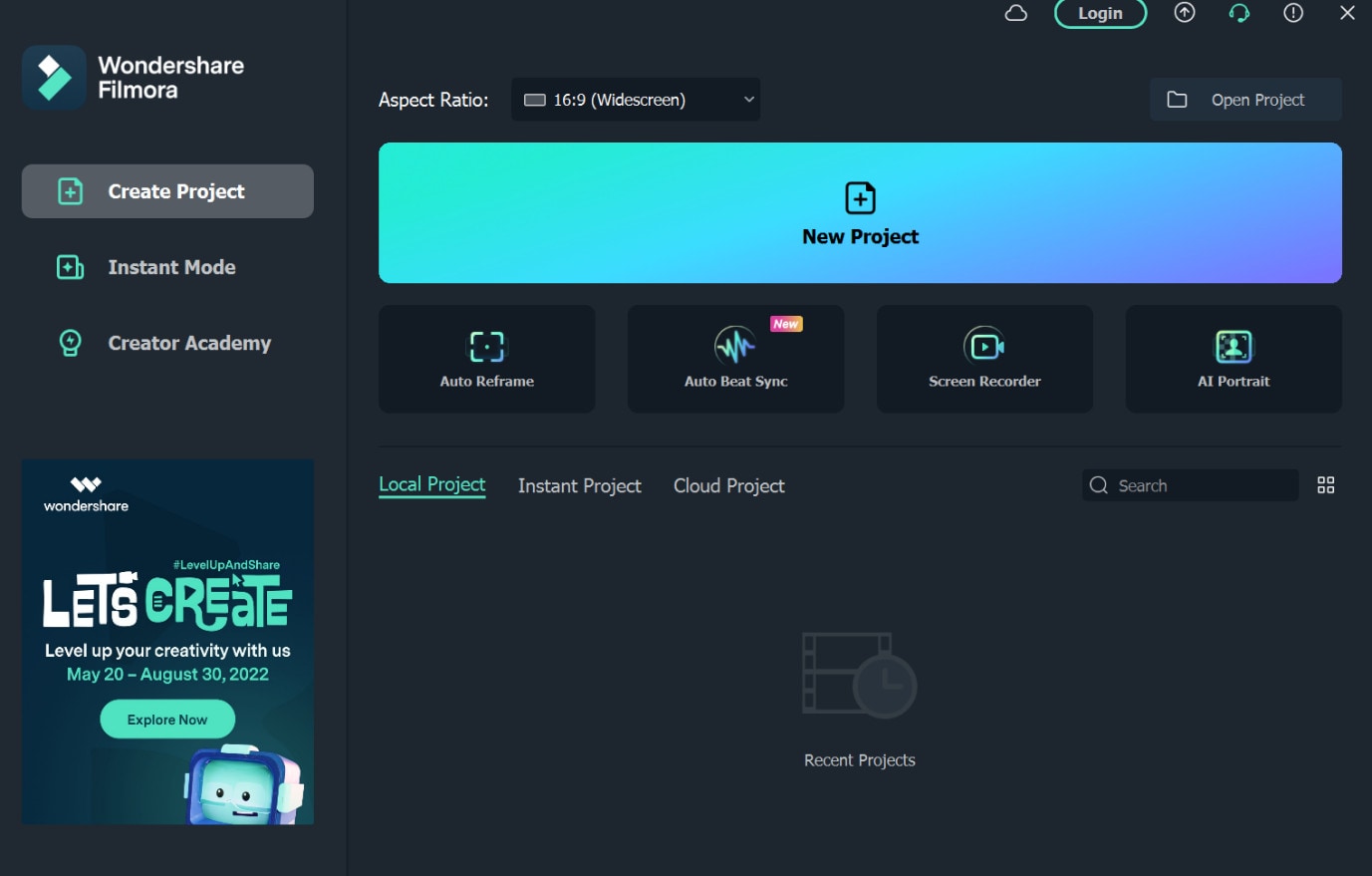

How to Edit and Deliver your HDR videos to YouTube with Wondershare Filmora?

Speaking of Photo HDR, you can enhance your captured HDR videos and post them on social media. To do that, you can use a top-grade video editor such as Wondershare Filmora.

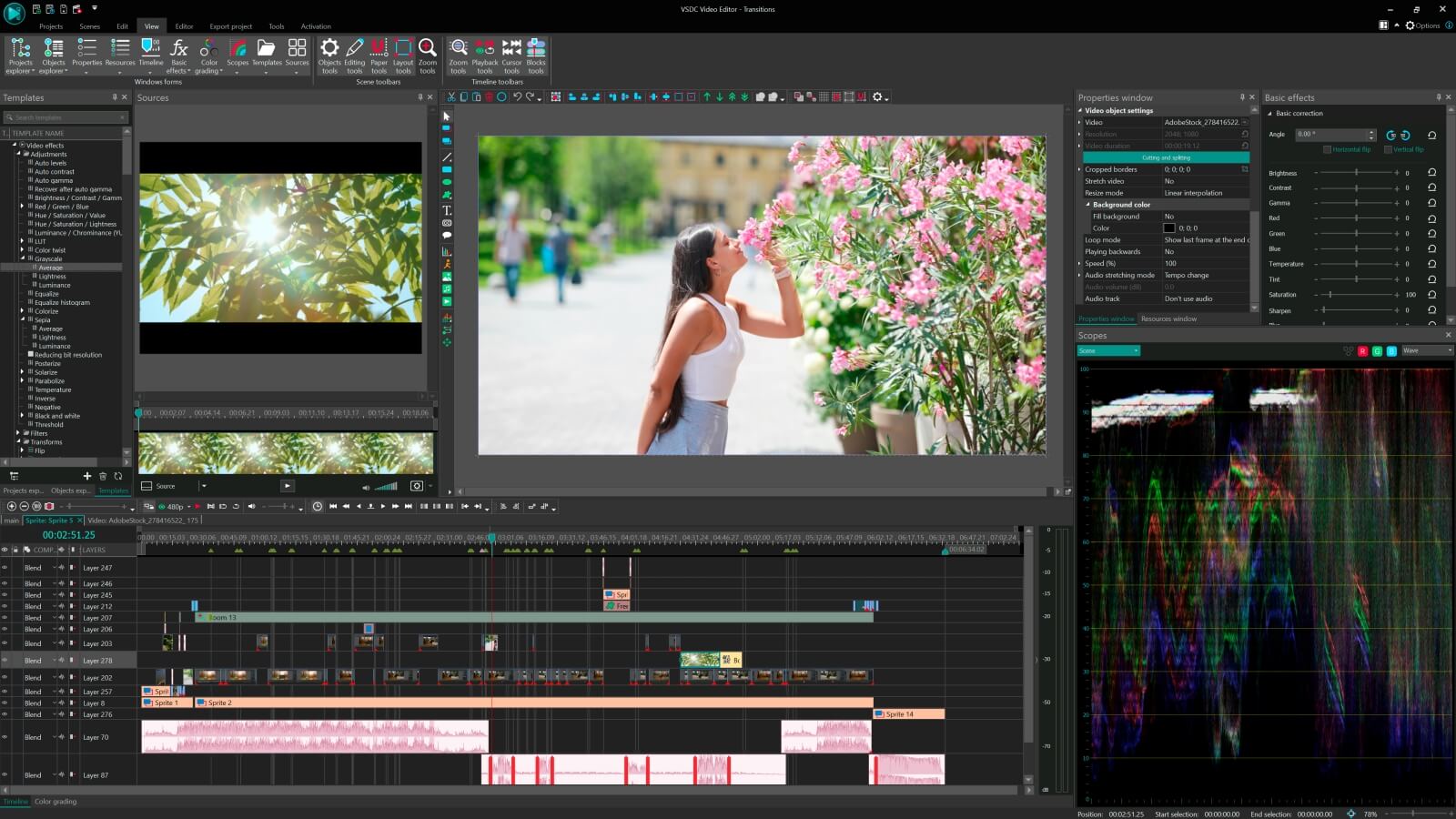

It supports editing HD and 4K videos and continues to gain new features, such as color matching, audio syncing for limited multicam, motion tracking, and speech-to-text. It can also handle large files and supports many video and image formats for input and output, including MP4, WMV, 3GP, OGG, and WEBM. These specifications make Filmora a suitable option for editing HDR videos.

How to edit HDR video and export HDR with Filmora?

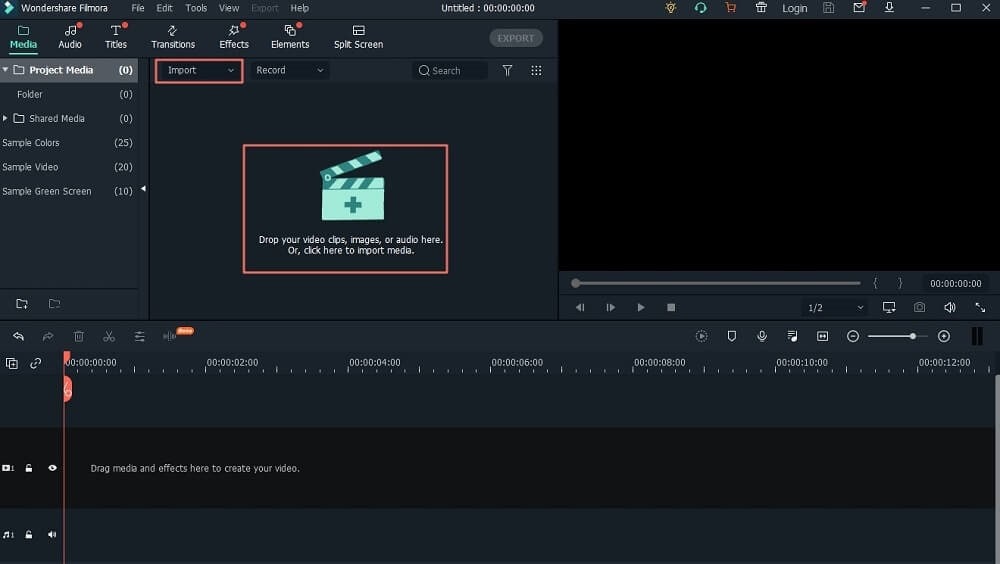

Step 1: Install and launch Filmora.

Step 2: Click New Project.

Step 3: When the editing screen opens, click the “Click here to import media” option and add the HDR video from your iPhone or other phone that you have saved to your PC.

Step 4: Drag and drop it into the editing timeline at the bottom of the screen. If you are merging several clips, add them as well.

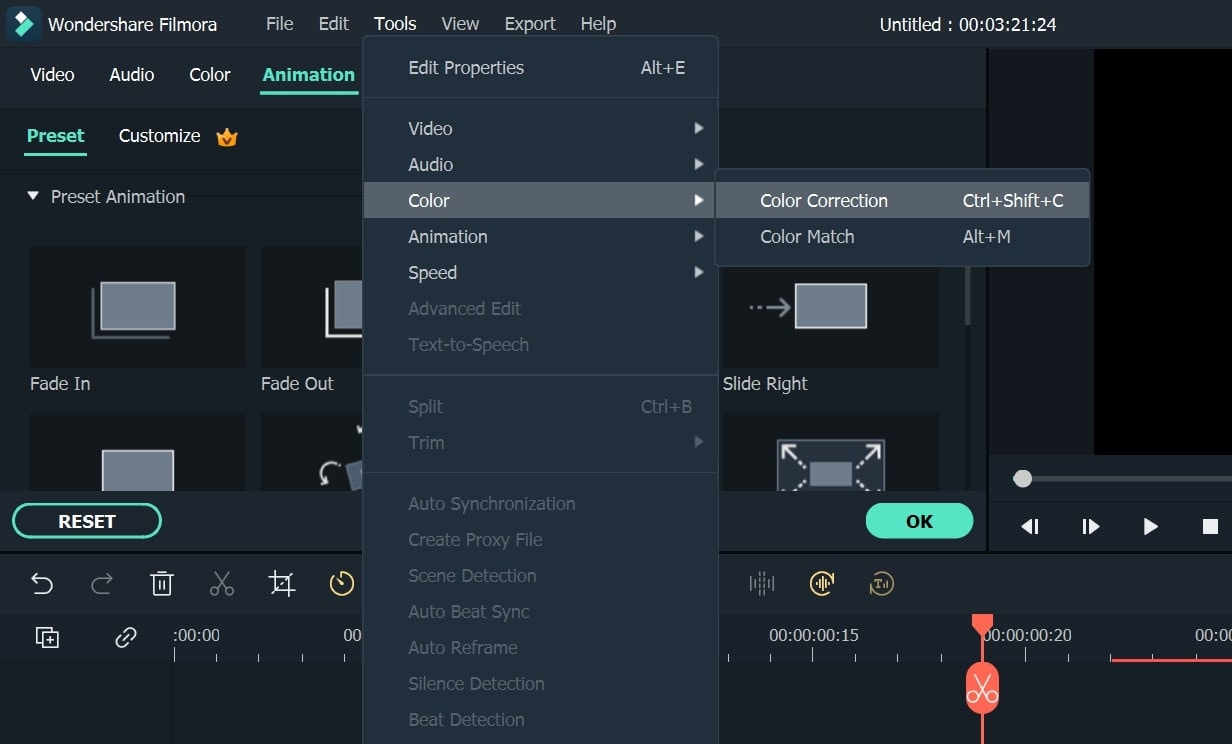

Step 5: Make further edits, such as Color Correction, Motion Tracking, Audio Effects, Animations, and Keyframing, as needed.

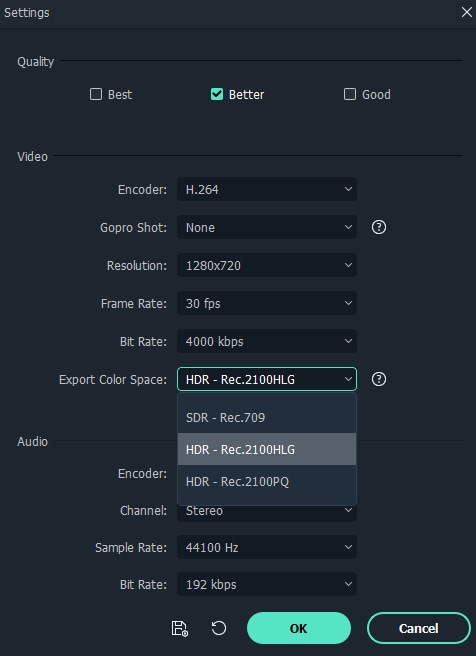

Step 6: Click the Export button when you have finished editing.

Step 7: Open the YouTube tab and log in.

Step 8: Adjust the file settings, add the video title, choose a category, and click Export to post the HDR video to YouTube.
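Before uploading, it can help to confirm that the exported file still carries HDR color metadata. One option, assuming FFmpeg's ffprobe tool is installed, is to read the video stream's color fields: PQ-based video reports a color_transfer of smpte2084, HLG video reports arib-std-b67, and both normally use bt2020 primaries. The helper names below are hypothetical, and this check is not part of Filmora's own workflow.

```python
"""Sketch: check a video file's HDR color metadata with ffprobe (must be on PATH)."""
import subprocess
import sys

HDR_TRANSFERS = {"smpte2084": "PQ", "arib-std-b67": "HLG"}


def parse_ffprobe_fields(output: str) -> dict:
    """Turn 'key=value' lines from ffprobe into a dictionary."""
    fields = {}
    for line in output.splitlines():
        if "=" in line:
            key, value = line.split("=", 1)
            fields[key.strip()] = value.strip()
    return fields


def describe_hdr(fields: dict) -> str:
    """Classify the stream from its transfer characteristic and primaries."""
    transfer = fields.get("color_transfer", "unknown")
    primaries = fields.get("color_primaries", "unknown")
    if transfer in HDR_TRANSFERS:
        return f"HDR, {HDR_TRANSFERS[transfer]} transfer ({primaries} primaries)"
    return f"probably SDR (transfer={transfer}, primaries={primaries})"


def probe(path: str) -> dict:
    """Run ffprobe on the first video stream and return its color fields."""
    result = subprocess.run(
        ["ffprobe", "-v", "error", "-select_streams", "v:0",
         "-show_entries", "stream=color_transfer,color_primaries,color_space",
         "-of", "default=noprint_wrappers=1", path],
        capture_output=True, text=True, check=True,
    )
    return parse_ffprobe_fields(result.stdout)


if __name__ == "__main__":
    print(describe_hdr(probe(sys.argv[1])))
```

Run it as `python check_hdr.py exported_video.mp4` (file names are placeholders). If the result says "probably SDR", the export settings likely dropped the HDR metadata.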

Final Words

SDR is supported by almost all televisions, monitors, and photography devices. For higher color depth, stronger contrast, and better overall image quality, however, HDR is the better choice. Before you upgrade, check display and GPU support, along with other specifications, to confirm which devices will work with HDR.


- Title: [New] Superior Imaging Why HDR Sets the Standard Over SDR

- Author: Donald

- Created at : 2024-08-21 16:49:27

- Updated at : 2024-08-22 16:49:27

- Link: https://some-tips.techidaily.com/new-superior-imaging-why-hdr-sets-the-standard-over-sdr/

- License: This work is licensed under CC BY-NC-SA 4.0.
